Are Facebook users aware they don't see everything in their newsfeed that their friends post, and if they are aware, what do they think about that?

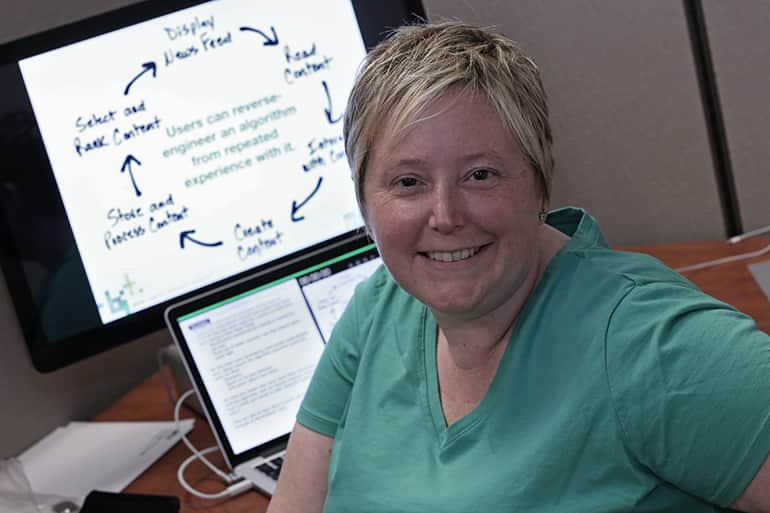

These questions are at the heart of a research study by Emilee Rader, AT&T Scholar and Assistant Professor in the Department of Media and Information.

"A lot of websites use recommender systems or personalization. When you log on to Amazon it suggests books for you to buy. That's the same kind of technology that Facebook uses in its newsfeed, except Facebook doesn't give you a choice. They don't say here's the list of possible newsfeed posts that you could be reading," Rader said.

Instead, Facebook selects what appears in a newsfeed using a complicated formula that ranks every post that could be shown, and only the top-ranked posts appear.
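
As a rough illustration of that ranking idea, here is a toy sketch in Python. It is not Facebook's actual formula, which the article does not describe: the `Post` class, the `score` field, the `build_feed` function, and the sample data are all hypothetical, and the sketch simply assumes that each candidate post already has a numeric score and that the feed keeps the highest-scoring ones.

```python
from dataclasses import dataclass
import heapq


@dataclass
class Post:
    author: str
    text: str
    # Hypothetical relevance score; the real scoring inputs are an assumption here.
    score: float


def build_feed(candidates, k):
    """Return up to k posts with the highest scores, best first."""
    return heapq.nlargest(k, candidates, key=lambda p: p.score)


# Made-up candidate posts for illustration only.
candidates = [
    Post("alice", "vacation photos", 0.9),
    Post("bob", "lunch update", 0.2),
    Post("carol", "new job", 0.7),
    Post("dave", "game invite", 0.1),
]

feed = build_feed(candidates, k=2)
print([p.author for p in feed])  # prints ['alice', 'carol']
```

In this sketch, the posts from "bob" and "dave" are never shown, and the reader gets no list of what was left out, which is the situation the study asks users about.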

"What Facebook is trying to do is guess which posts you are more likely to want to see," Rader said. "One of the things we were wondering is whether people notice this is happening or are they totally unaware? We wanted to understand how users are reacting to this. What they think about it? Do they even notice it?"

Rader found that some people were not aware this was happening.

"There were a few people who were pretty sure this was happening, but most people were in the maybe to no range at the time we did the study (April 2014)," Rader said. "However, I think there is more public awareness now that there is a filtering algorithm."

Rader, whose research is supported by a $502,093 National Science Foundation (NSF) grant, wrote a paper on this study, titled "Understanding User Beliefs About Algorithmic Curation in the Facebook News Feed," co-authored by Ph.D. student Rebecca Gray.

Rader and Gray presented the paper April 18-23 in Seoul, Korea, at the CHI (Computer-Human Interaction) conference, the most prestigious conference focused on human-computer interaction. The conference attracts the world's leading researchers and practitioners in the field to share groundbreaking research and innovations related to how humans interact with digital technologies.

"What the paper was about was a study where we did a big survey that had an open-ended question on it where we asked people if they think they see everything that their friends posts and why they think so," Rader said. "It turned out that there were some people who were like ‘why wouldn't I be seeing everything?' But most people had actually noticed that there was something going on."

Some survey respondents had even tried to influence what appears in their newsfeed through their own actions on Facebook.

"People were noticing that the actions they did were having a cause and effect relationship to what they were seeing and were trying to do things to achieve certain goals in their newsfeed," Rader said. "For example, there were some people who had created a routine of going and visiting particular people's profile pages just to make sure they would start showing up in their newsfeed. It's sort of like search engine optimization, the things that people do to get their stuff ranked higher."

One goal of the research is to better understand the interaction between people and algorithms in an effort to design better systems.

"In a system like Facebook, essentially what you see in your news feed is the result of a combination of your own behavior and then what the algorithm is doing," Rader said. "There are more and more systems that are coming up that are like this. And so I feel like it's important to be able to understand and characterize what these interactions look like."

Facebook is not the only system that uses algorithms to decide what you see.

"Everywhere you interact with a recommender system, there is an algorithm that is choosing what to show you and what not to show you," Rader said.

The argument for using algorithms is that if everything were allowed through, the experience would not be user-friendly and no one would use these systems.

"I believe that to be true, but I also think that it's not perfect either, which is why it's worth studying," Rader said.

Rader has written a second paper about this research, which is currently under review. That paper is about people's reactions when they found out that there were things they were not seeing in their Facebook newsfeed.

Rader helps lead the Behavior, Information and Technology Lab (BITLab) within the College of Communication Arts and Sciences, where she conducts her research.